
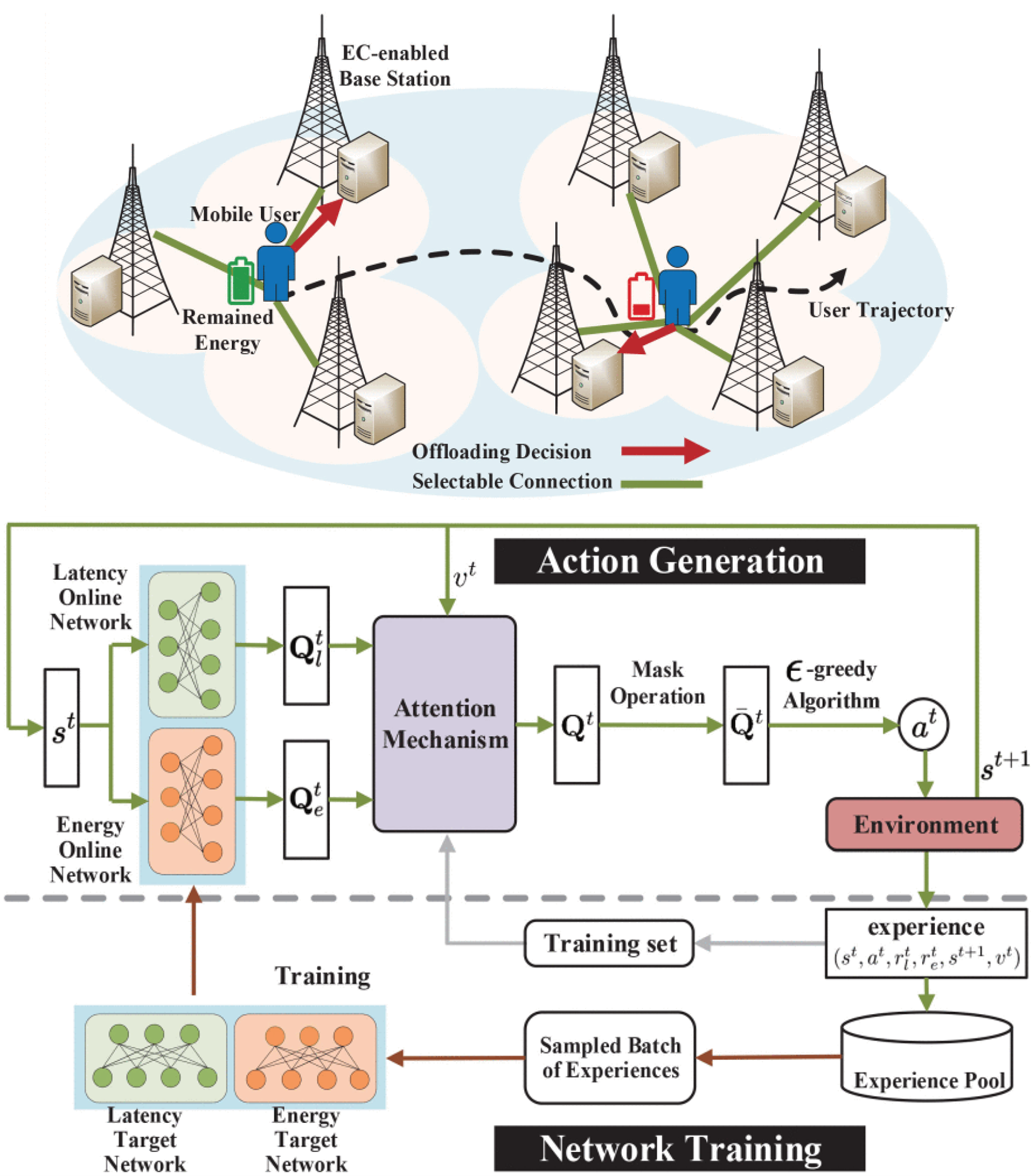

Abstract: With the explosion of mobile smart devices, many computation-intensive applications have emerged, such as interactive gaming and augmented reality. Mobile-edge computing (MEC) has been put forward as an extension of cloud computing to meet the low-latency requirements of these applications. In this article, we consider an MEC system built in an ultradense network with numerous base stations. Heterogeneous computation tasks are successively generated on a smart device moving through the network. The device user seeks an optimal task offloading strategy, together with optimal CPU frequency and transmit power scheduling, to minimize both task completion latency and energy consumption over the long term. However, the stochastic task generation and dynamic network conditions make the problem particularly difficult to solve. Inspired by reinforcement learning, we transform the problem into a Markov decision process. We then propose an attention-based double deep Q network (DDQN) approach, in which two neural networks are employed to estimate the cumulative latency and energy rewards achieved by each action. Moreover, a context-aware attention mechanism is designed to adaptively assign different weights to the values of each action. We also conduct extensive simulations to compare the performance of our proposed approach with several heuristic and DDQN-based baselines.
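To make the action-selection idea concrete, the following is a minimal sketch and not the implementation from the article. It assumes that the two networks have already produced per-action value estimates (the hypothetical lists `q_latency` and `q_energy`) and that the attention mechanism has produced two context-dependent scores (the hypothetical `attention_logits`), which are normalized with a softmax and used to weight the two estimates before the best action is chosen. All function and variable names are illustrative assumptions.

```python
import math


def softmax(scores):
    """Normalize raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_action(q_latency, q_energy, attention_logits):
    """Pick the action with the highest attention-weighted value.

    q_latency, q_energy: per-action value estimates, assumed to come
        from two separate networks (hypothetical inputs).
    attention_logits: two raw scores [latency, energy], assumed to come
        from a context-aware attention module (hypothetical input).
    """
    w_lat, w_en = softmax(attention_logits)
    combined = [w_lat * ql + w_en * qe for ql, qe in zip(q_latency, q_energy)]
    best = max(range(len(combined)), key=combined.__getitem__)
    return best, combined


# Illustrative values: rewards are negative costs, so larger is better.
q_latency = [-3.0, -1.0, -2.0]
q_energy = [-1.0, -4.0, -1.5]

action, values = select_action(q_latency, q_energy, [0.0, 0.0])
print(action, values)
```

With equal attention scores, the two estimates are averaged; shifting the scores toward latency or energy changes which action is preferred, which is the adaptive weighting the abstract describes at a high level.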


Abstract: With the development of 5G networks, computation-intensive applications on mobile devices have emerged, such as augmented reality and video stream analysis. Mobile edge computing has been put forward as a new computing paradigm that meets the low-latency requirements of these applications by moving services from the cloud to the network edge, such as base stations. Because an edge server has limited storage space and computing capacity, service placement is an important issue: it determines which services are deployed at the edge to serve the corresponding tasks. The problem becomes particularly complicated when considering the stochastic arrival of tasks, the additional latency incurred by service migration, and the time tasks spend waiting in queues for processing at the edge. Drawing on reinforcement learning, we formulate service placement as a Markov decision process and propose a deep Q network based approach. The approach outputs real-time service placement strategies that minimize the total latency of arriving tasks over the long term. Extensive simulation results demonstrate that our approach works effectively.
